The AI Bubble Survival Guide

Why I stopped worrying about AI disruption and started taking the long view again

Inside the AI Bubble: Who’s Cashing Out, Who Gets Burned, and How to Survive

I spent two years paralyzed by AI uncertainty. Then I recognized the pattern — and started planning again.

Two years ago, unit economics was the only religion. Founders who couldn’t explain their path to profitability got shown the door. VCs wanted to see your burn multiple, your LTV/CAC, your runway math.

Now? Mention profitability in a pitch and watch the room go quiet. The only word that opens wallets is “AI.”

I’ve been at this long enough to find it both hilarious and exhausting. The cycles. The amnesia. The absolute certainty that this time the rules have changed.

They haven’t.

The Crowd Is Always Late. That’s Why I Trust Patterns, Not Hype.

Let me tell you a story that captures how this works.

When we started building HRV analysis at Welltory, VCs passed because their “expert advisors” said heart rate variability would not become a popular metric.

Then Apple put HRV on the Watch. Oura made it their headline metric. The thing that was “niche” became the hottest category overnight.

And now? HRV is everywhere. We now think there are better options, yet VCs are once again saying: “HRV is a must.” The crowd is always late because it’s driven by FOMO, not insight.

This year, for the first time since ChatGPT dropped, I can actually plan years ahead. Not because the fog lifted, but because I finally recognized what kind of fog it is. It’s liberating. So let me show you something that becomes obvious once you start looking for patterns.

All Bubbles Turn at Stage 4. AI Is Entering Stage 4.

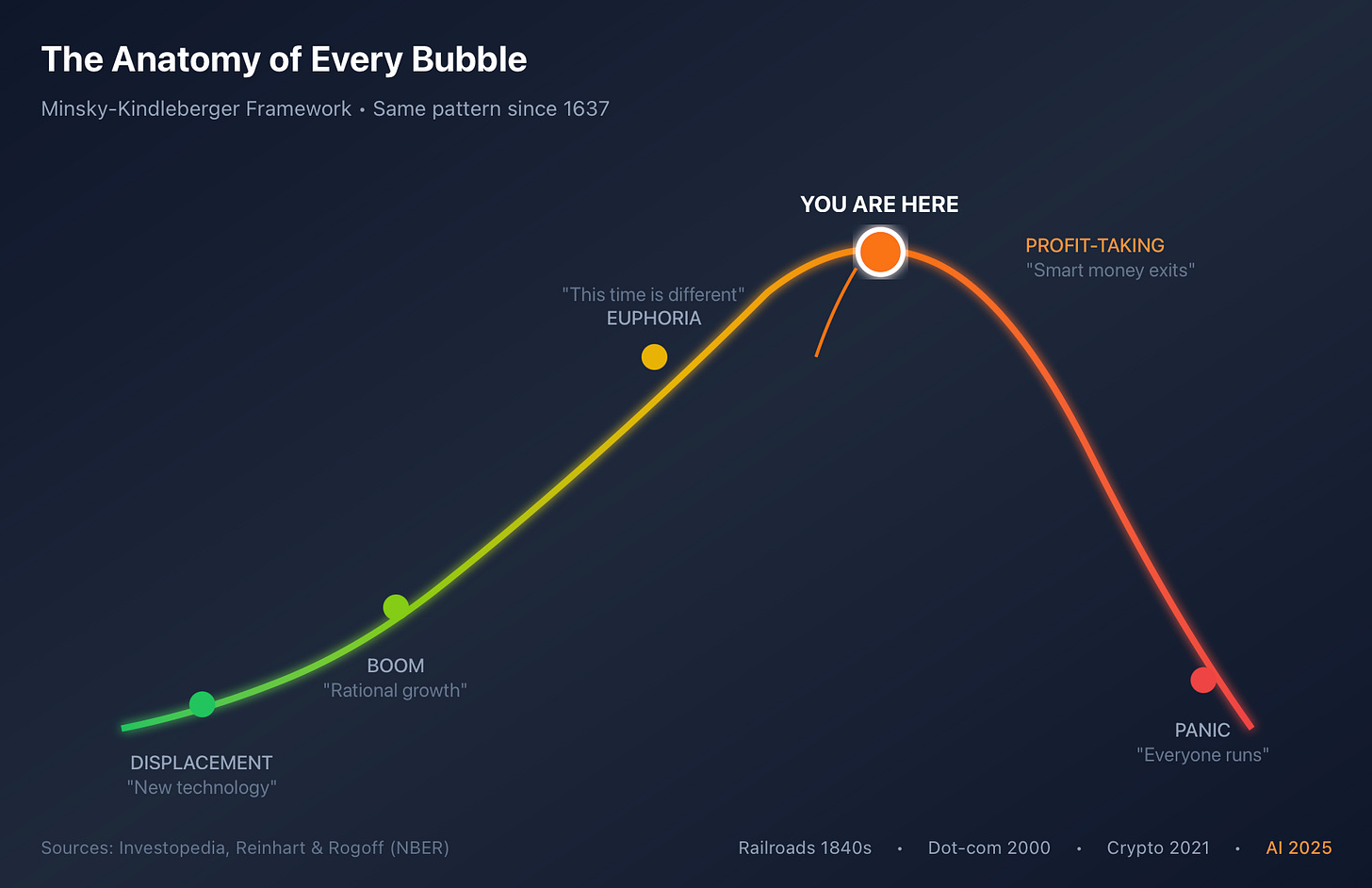

Economists figured out the anatomy of bubbles decades ago. Minsky, Kindleberger — they mapped the same five stages that show up in every bubble from tulips to railroads to dot-com to crypto:

Stage 1: Displacement. A genuinely new technology shows up. This part is usually real.

Stage 2: Boom. Prices go up, but rationally. Early movers make money. Smart money pays attention.

Stage 3: Euphoria. “This time is different.” Valuations detach from anything resembling reality. Everyone’s a genius.

Stage 4: Profit-taking. Smart money quietly walks toward the exits while the music’s still playing.

Stage 5: Panic. Everyone realizes they need to leave. The door is not big enough.

Where are we now? Entering Stage 4. I’ll show you why.

The frustrating part: we know this. Reinhart and Rogoff studied 800 years of financial crises and found the same four words echoing through every single one: “This time is different.”

It never is.

Keynes had a darker take: “It is better for reputation to fail conventionally than to succeed unconventionally.” Which is why fund managers pile into the same overpriced deals. If everyone loses together, nobody gets fired. Miss the next Google alone, and you’re done.

There’s a more hopeful frame too. Carlota Perez argues that major technologies go through a “frenzy” phase of crazy overinvestment, then a painful turning point, then a “deployment” phase where the technology actually becomes useful. The internet bubble popped in 2000. The internet didn’t go away — it became the backbone of modern life.

AI will follow the same arc. The question isn’t whether there’ll be a correction. It’s who gets hurt when it happens.

Smart Money Is Exiting. Here’s How You Can Tell.

I’m not going to predict timing. Nobody can. But I can show you what the data says about which stage we’re in — and why it matters for your decisions.

$300B Spent on AI Infrastructure — With No Proof It Will Pay Off

According to the Financial Times, Amazon, Microsoft, Alphabet, and Meta are targeting over $300 billion in AI infrastructure spending for 2025 alone.

Webscale market data shows hyperscaler capex hit ~$304 billion in 2024 — up 56% year-over-year, a record driven by GenAI and data center expansion.
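The two figures above also tell you how fast the spending ramped. A quick back-of-the-envelope check, assuming the 56% growth is measured over the same set of companies as the $304 billion total:

```python
# Reported figures from the text (Webscale market data).
capex_2024 = 304e9   # hyperscaler capex in 2024, in dollars
yoy_growth = 0.56    # year-over-year growth as a fraction

# If 2024 = 2023 * (1 + growth), then 2023 = 2024 / (1 + growth).
capex_2023_implied = capex_2024 / (1 + yoy_growth)
added_in_one_year = capex_2024 - capex_2023_implied

print(f"Implied 2023 capex: ${capex_2023_implied / 1e9:.0f}B")
print(f"Added in one year:  ${added_in_one_year / 1e9:.0f}B")
```

That works out to roughly $195 billion in 2023, and about $109 billion of new annual spending added in a single year.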

The money is being spent. The question nobody can answer yet: where’s the return? When infrastructure gets built at this pace without clear ROI, the market becomes extremely fragile. Any signal that the payoff isn’t coming triggers panic.

Someone Is Inflating This Bubble on Purpose. Follow the Money.

Here’s something that should make you pause.

In September 2025, NVIDIA announced plans to invest up to $100 billion in OpenAI to finance massive data center construction. Sounds impressive until you look at the structure.

NVIDIA gives OpenAI money. OpenAI uses that money to build data centers. Those data centers need GPUs. Who sells the GPUs? NVIDIA.

OpenAI’s CFO Sarah Friar said it plainly: “Most of the money will return to NVIDIA.”

This is vendor financing — the company selling you the product is lending you the money to buy it. It creates the appearance of demand while the money circulates within a closed loop.

We’ve seen this movie before. In the late 1990s, Cisco extended credit to customers so they could afford more routers. When those customers went bust, so did the loans. Cisco’s stock dropped 85% and took 20 years to recover.
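Part of why recovery takes so long is that losses compound asymmetrically. A minimal sketch of the arithmetic (pure math, not a model of Cisco’s actual share price):

```python
def gain_needed_to_recover(drawdown: float) -> float:
    """Fractional gain needed to return to the prior peak after a drop.

    drawdown is a fraction, e.g. 0.85 for an 85% decline.
    """
    if not 0 <= drawdown < 1:
        raise ValueError("drawdown must be in [0, 1)")
    # After the drop the price is (1 - drawdown) of the peak,
    # so it must grow by a factor of 1 / (1 - drawdown) to get back.
    return 1 / (1 - drawdown) - 1


for d in (0.50, 0.70, 0.85):
    print(f"{d:.0%} drop -> needs a {gain_needed_to_recover(d):.0%} gain to break even")
```

An 85% drop means the stock has to grow more than sixfold from its low just to get back to even, before accounting for inflation.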

The NVIDIA-OpenAI dance is more sophisticated, but the underlying dynamic is similar. Bloomberg described this as a “circular economy” where money flows between a small group of interconnected players:

Microsoft invests billions in OpenAI and provides Azure cloud credits

OpenAI uses Azure (driving Microsoft’s cloud revenue)

Microsoft needs more GPUs for Azure → buys from NVIDIA

NVIDIA invests in OpenAI → OpenAI buys more GPUs

Everyone’s revenue goes up. Everyone’s stock goes up. But the underlying cash is just... circulating. And if one player stumbles, the interconnections amplify the damage across the entire ecosystem.
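To make the “circular economy” concrete, here is a toy model of the loop above. Every input is a hypothetical illustration, not a reported figure, and the model deliberately leaves out outside paying customers so that only the circular part is visible:

```python
def circular_revenue(msft_in, nvda_in, to_azure, azure_to_gpus, to_gpus):
    """Toy model of the investment loop described above (all inputs hypothetical).

    msft_in       -- dollars Microsoft invests in OpenAI
    nvda_in       -- dollars NVIDIA invests in OpenAI
    to_azure      -- fraction of Microsoft's money OpenAI spends on Azure
    azure_to_gpus -- fraction of that Azure revenue Microsoft spends on NVIDIA GPUs
    to_gpus       -- fraction of NVIDIA's money OpenAI spends on NVIDIA GPUs
    """
    msft_revenue = msft_in * to_azure
    # NVIDIA is paid twice: via Microsoft's GPU purchases and via OpenAI's.
    nvda_revenue = msft_revenue * azure_to_gpus + nvda_in * to_gpus
    return {
        "Microsoft revenue": msft_revenue,
        "NVIDIA revenue": nvda_revenue,
        "total booked revenue": msft_revenue + nvda_revenue,
        # Only investor money enters this loop; no outside customer pays.
        "investor cash put in": msft_in + nvda_in,
    }


for name, value in circular_revenue(10, 10, 0.9, 0.5, 0.9).items():
    print(f"{name:>22}: ${value:.1f}B")
```

With these made-up inputs, $20B of investment shows up as $22.5B of booked revenue, because the same dollar is counted once as Azure revenue and again as GPU revenue. None of it requires an outside customer to pay for anything.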

This isn’t fraud. It might even work out. But it’s a structure that concentrates systemic risk — exactly what regulators are starting to notice.

OpenAI Has No Idea How to Break Even. They Admitted It.

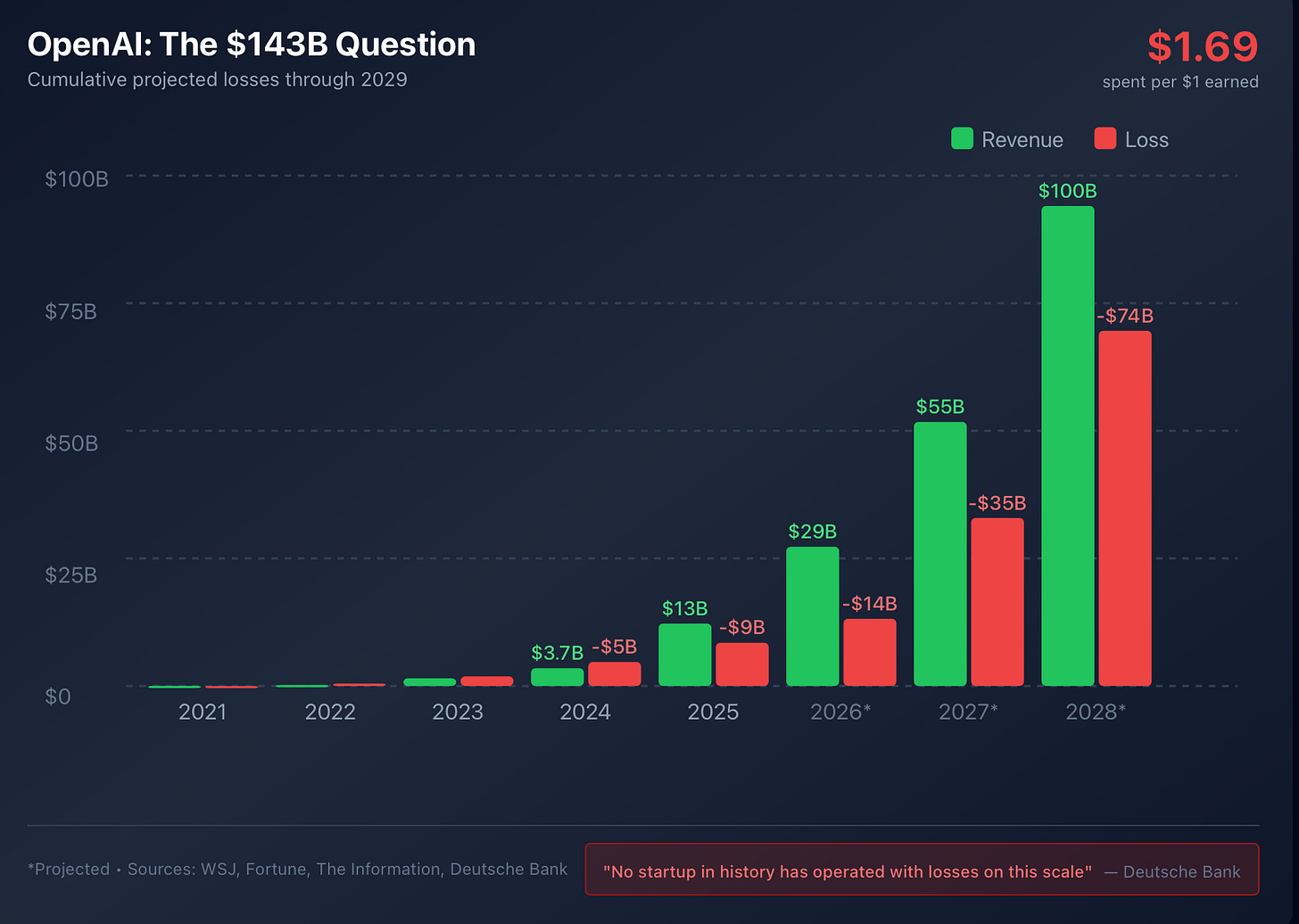

OpenAI’s growth is legitimately extraordinary. Revenue went from $28 million in 2022 to a projected $13 billion in 2025.

Here’s the problem: they’re spending $1.69 for every dollar they earn.

According to Fortune, OpenAI expects to lose around $9 billion in 2025 on $13 billion in revenue. By 2028, they project $74 billion in operating losses — in a single year. Cumulative losses through 2029? Somewhere between $115 billion and $143 billion.
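The $1.69 figure follows directly from Fortune’s numbers if you treat the loss as total spending minus revenue. A quick check, which also annualizes the revenue growth quoted above:

```python
revenue_2022 = 28e6   # from the text
revenue_2025 = 13e9   # projected, from the text
loss_2025 = 9e9       # expected 2025 loss, from the text (Fortune)

years = 2025 - 2022
growth_multiple = revenue_2025 / revenue_2022
cagr = growth_multiple ** (1 / years) - 1  # compound annual growth rate

# Assumption: loss = spending - revenue, so spending = revenue + loss.
spend_2025 = revenue_2025 + loss_2025
spend_per_revenue_dollar = spend_2025 / revenue_2025

print(f"Revenue grew ~{growth_multiple:.0f}x, about {cagr:.0%} per year")
print(f"Spending per $1 of revenue: ${spend_per_revenue_dollar:.2f}")
```

That is roughly 464x growth in three years (about 674% a year, compounded) alongside about $1.69 of spending per dollar of revenue.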

Deutsche Bank put it bluntly: “No startup in history has operated with losses on anything approaching this scale.”

The most telling detail came from Sam Altman himself. On X, he admitted that OpenAI is losing money on its $200/month ChatGPT Pro subscription. “People use it much more than we expected,” he wrote.

Think about that. A $200/month subscription — expensive by any consumer software standard — and they’re still underwater on it. Because inference costs for frontier models are brutal, and power users will use every token they can get.

This isn’t a criticism of OpenAI’s technology. It’s an observation about economics. When your product gets more expensive to deliver the more people use it, you don’t have a software company. You have a very sophisticated utility with negative margins.
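The economics of a flat subscription with usage-driven costs can be sketched in a few lines. The $200 price comes from the text; the $10-per-million-token serving cost is a hypothetical placeholder, since the real figure isn’t public:

```python
PRICE_PER_MONTH = 200.0  # ChatGPT Pro price, from the text


def monthly_margin(tokens_per_month: float, cost_per_million_tokens: float) -> float:
    """Gross margin in dollars for one flat-price subscriber.

    cost_per_million_tokens is a hypothetical serving cost, not a reported figure.
    """
    serving_cost = tokens_per_month / 1e6 * cost_per_million_tokens
    return PRICE_PER_MONTH - serving_cost


def breakeven_tokens(cost_per_million_tokens: float) -> float:
    """Monthly token usage at which the subscriber stops being profitable."""
    return PRICE_PER_MONTH / cost_per_million_tokens * 1e6


cost = 10.0  # hypothetical: $10 to serve one million tokens
print(f"Break-even usage: {breakeven_tokens(cost) / 1e6:.0f}M tokens/month")
for usage in (5e6, 20e6, 50e6):
    print(f"{usage / 1e6:>4.0f}M tokens -> margin {monthly_margin(usage, cost):+,.0f} USD")
```

In classic software, marginal cost is near zero, so heavy users are the most profitable ones. Here the relationship flips: every subscriber past the break-even point loses money, and the heaviest users lose the most.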

VCs Are Hoarding Cash “Just in Case.” That’s a Warning Sign.

The FT reports that AI startups in the US raised a record ~$150 billion in 2025 — higher than the previous peak of ~$92 billion in 2021. The explicit logic? “Build a fortress balance sheet in case 2026 gets ugly.”

Crunchbase data shows AI captured nearly 50% of all global VC funding in 2025, up from 34% in 2024. Total: $202 billion into AI alone.

When the smartest players in the room are stockpiling cash “just in case” — that tells you something about what they expect is coming.

When Employees Sell $6.6B at Peak Valuation, They Know Something.

Reuters reported that OpenAI hit a ~$500 billion valuation through a secondary sale where employees and former employees sold about $6.6 billion in shares. The company reportedly authorized over $10 billion in secondary sales total.

Secondary transactions aren’t bad by themselves. But a flood of secondary sales at peak hype? That’s a classic mechanism for early holders to transfer risk to later buyers.

The people who built OpenAI are cashing out. At record valuations. While telling everyone else to buy in.

CEOs Are Selling. Retail Investors Are Buying. Guess Who Loses.

Don’t listen to what executives say. Watch SEC Form 4 filings — what they actually do with their own shares.

NVIDIA’s CEO Jensen Huang has sold more than $1.9 billion in stock since 2024, executing sales steadily through 10b5-1 plans at peak valuations.

The aggregate picture: insider buy/sell ratios dropped to 0.29 in 2025, well below the historical average of 0.42-0.50. In other words, for every dollar insiders spend buying their own stock, they sell roughly $3.45.
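A buy/sell ratio is easier to read once inverted. A minimal sketch, assuming the ratio is measured in dollars (some datasets count transactions or insiders instead, in which case the “dollars sold per dollar bought” reading does not hold):

```python
def dollars_sold_per_dollar_bought(buy_sell_ratio: float) -> float:
    """Invert a dollar-weighted buy/sell ratio.

    A ratio of 0.29 means insiders bought $0.29 for every $1 they sold,
    so they sold 1 / 0.29 dollars for every $1 they bought.
    """
    if buy_sell_ratio <= 0:
        raise ValueError("ratio must be positive")
    return 1 / buy_sell_ratio


for label, ratio in [("2025", 0.29), ("historical avg, low", 0.42), ("historical avg, high", 0.50)]:
    sold = dollars_sold_per_dollar_bought(ratio)
    print(f"{label:>20}: ${sold:.2f} sold per $1 bought")
```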

Now — important caveat. Insider selling can mean lots of things: diversification, taxes, estate planning. Academic research shows aggregate insider activity has some predictive value for market returns, but it’s not a timing tool.

I’m not saying “crash imminent.” I’m saying: this is what Stage 4 looks like. Early holders are converting paper to cash while the music plays.

The Future Isn’t Giant Cloud Models. It’s AI on Your Phone.

Here’s where the story gets hopeful — if you’re paying attention.

While everyone debates whether we’re in a bubble, the actual product direction of AI is becoming clear. And it’s not what the hype suggests.

Apple and Google Are Building AI That Runs on Your Phone

Apple’s documentation is unambiguous:

“The cornerstone of Apple Intelligence is on-device processing.” — Apple Support

Their Private Cloud Compute is positioned as a fallback for complex tasks, with strong privacy guarantees. The default is local.

Android’s Gemini Nano is explicitly about processing “without sending your data off the device.” Google’s developer documentation makes the on-device emphasis clear.

Microsoft’s Phi-3 technical report describes their 3.8B parameter model as “small enough to be deployed on a phone” while maintaining strong reasoning capabilities. Their research page literally says “locally on your phone” in the title.

Meta’s Llama 3.2 release explicitly targeted edge and on-device deployment. The model card for the 1B version positions it for exactly this use case.

The point: This isn’t analysts speculating. The companies that control the platforms — iOS, Android, Windows — are building their AI strategies around on-device inference. Privacy, latency, cost. Those aren’t buzzwords; they’re product requirements.

A $6M Model Now Matches a $100M One. Moats Are Disappearing.

This isn’t ideology. It’s economics.

If your product is a daily-use app — health tracking, financial assistant, customer support, B2B workflow — then:

Latency matters more than +3% on benchmarks

Privacy is a feature, not a constraint

Inference cost becomes your gross margin
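One way to see why inference cost becomes gross margin, and why on-device inference matters for it, is a per-user model where some share of requests runs locally. The price, request count, and per-request cost below are hypothetical, and treating on-device requests as free to the developer is a simplifying assumption:

```python
def gross_margin(price_per_user, requests_per_user, cloud_cost_per_request, local_fraction):
    """Monthly gross margin per user when inference is the main cost of goods sold.

    local_fraction is the share of requests served on-device. Those are assumed
    to cost the developer nothing at the margin, since the user's phone does the work.
    All inputs are hypothetical.
    """
    cogs = requests_per_user * (1 - local_fraction) * cloud_cost_per_request
    return (price_per_user - cogs) / price_per_user


# Hypothetical daily-use app: $10/month, 600 requests/month, $0.01 per cloud request.
for local in (0.0, 0.5, 0.9):
    m = gross_margin(10.0, 600, 0.01, local)
    print(f"{local:.0%} on-device -> gross margin {m:.0%}")
```

Under these assumptions, moving from all-cloud to 90% on-device lifts gross margin from 40% to 94%. Real numbers will differ for any given product; the shape of the curve is the point.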

Andrew Ng has been pushing this for years. His framing of data-centric AI:

“The discipline of systematically engineering the data needed to build a successful AI system.”

The insight: most industries need custom models trained on their own data, not “one model to rule them all.”

DeepSeek proved this dramatically. Their V3 technical report documents training at roughly 2.788 million H800 GPU hours — a fraction of what frontier Western models reportedly require. Reuters covered their claim of a theoretical 545% daily cost-profit ratio on inference.
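The “$6M” figure comes from the V3 report itself, which prices its 2.788 million H800 GPU hours at an assumed rental rate of $2 per GPU hour and notes that the total excludes prior research and ablation experiments. The arithmetic, plus what a 545% cost-profit ratio implies:

```python
# Training cost, using the rental rate the V3 report assumes.
h800_gpu_hours = 2.788e6
assumed_rental_per_hour = 2.0  # dollars per GPU hour
training_cost = h800_gpu_hours * assumed_rental_per_hour

# A cost-profit ratio of 545% means profit = 5.45 x cost,
# so revenue = cost + profit = 6.45 x cost.
cost_profit_ratio = 5.45
revenue_per_dollar_of_cost = 1 + cost_profit_ratio
implied_margin = cost_profit_ratio / revenue_per_dollar_of_cost

print(f"Training compute: ${training_cost / 1e6:.3f}M")
print(f"Revenue per $1 of inference cost: ${revenue_per_dollar_of_cost:.2f}")
print(f"Implied margin on that revenue: {implied_margin:.0%}")
```

As the text says, the 545% figure is theoretical rather than realized, so treat the implied margin as an upper bound on what the claim supports.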

What matters for founders: when “good enough” quality becomes available at dramatically lower cost, the pricing power of expensive inference providers collapses. Business models shift toward compression, hybrid architectures, and workflow integration.

The model itself stops being the moat.

When Bubbles Pop, Founders Lose Everything. VCs Keep Their Fees.

Here’s what I wish someone had explained to me earlier: in bubbles, the architects rarely suffer. The pain flows downhill.

The Teflon Layer

Microsoft structured its OpenAI deal carefully. They hold licensing rights to the technology. If OpenAI can’t pay its Azure bills, Microsoft — as major creditor and license holder — is well-positioned to acquire the technology through the debt. It’s not a bet on OpenAI’s independence. It’s a call option with downside protection.

NVIDIA generates more than $25 billion in cash per quarter and has a $60 billion buyback authorization. If its stock drops 70% (a Cisco-style crash), the company doesn’t die. It buys back shares cheap and waits for the next cycle. Jensen has done this before: with crypto, with gaming.
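A fixed buyback budget goes much further after a crash. A sketch using the $60 billion authorization from the text and a hypothetical $180 share price, ignoring the fact that heavy buying would itself move the price:

```python
def shares_retired(buyback_dollars: float, share_price: float) -> float:
    """Number of shares a fixed buyback budget retires at a given price."""
    return buyback_dollars / share_price


budget = 60e9                            # buyback authorization, from the text
peak_price = 180.0                       # hypothetical share price
crashed_price = peak_price * (1 - 0.70)  # the 70% drop scenario from the text

at_peak = shares_retired(budget, peak_price)
after_crash = shares_retired(budget, crashed_price)
print(f"Shares retired at peak:        {at_peak / 1e6:,.0f}M")
print(f"Shares retired after 70% drop: {after_crash / 1e6:,.0f}M "
      f"({after_crash / at_peak:.2f}x)")
```

At 70% off, the same budget retires about 3.3x as many shares, which is why a cash-rich company can come out of a crash with a smaller share count.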

The people holding the bag are retail investors who bought at the top, and pension funds with exposure to inflated valuations.

“Successful Exit” Can Mean Zero for Founders. Here’s the Math.

When you read that a startup raised at a $1B valuation, it’s natural to assume the founders got rich. Usually they didn’t. The valuation is mostly fiction — a number that sets share price but says nothing about who gets paid when things go sideways.

Late-stage investors increasingly demand liquidation preferences: contractual rights to get their money back before anyone else sees a dollar. 1x is standard, but in frothy late-stage rounds it can be 2x or more.

Quick example: a company raises $100M with a 2x liquidation preference. The bubble deflates, and the company sells for $150M. Looks like success, right?

Investors are owed $200M (2x their investment). There’s only $150M. Investors take it all. Founders and employees get zero.

Years of work. Nothing.
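The liquidation preference example above can be written as a simple waterfall. This is a minimal sketch of a single class of non-participating preferred stock; the 25% investor ownership is a hypothetical assumption, and real cap tables add seniority, participation rights, and option pools:

```python
def liquidation_waterfall(exit_value, invested, multiple, investor_ownership):
    """Split an exit between preferred investors and common (founders, employees).

    Non-participating preferred: investors take the larger of
    (a) their preference (invested * multiple), capped at the exit value, or
    (b) converting to common and taking their ownership share.
    """
    preference = min(invested * multiple, exit_value)
    as_converted = exit_value * investor_ownership
    investors = max(preference, as_converted)
    return investors, exit_value - investors


scenarios = [
    ("2x pref, $150M sale", 150e6, 2.0),  # the example from the text
    ("1x pref, $150M sale", 150e6, 1.0),
    ("2x pref, $500M sale", 500e6, 2.0),
]
for label, exit_value, multiple in scenarios:
    inv, common = liquidation_waterfall(exit_value, 100e6, multiple, 0.25)
    print(f"{label}: investors ${inv / 1e6:.0f}M, "
          f"founders and employees ${common / 1e6:.0f}M")
```

The first line reproduces the text: a $150M sale leaves nothing for common. At a 1x preference, the same sale would leave $50M for founders and employees.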

Employees with options. Same math, worse leverage.

Pension funds and endowments. VCs manage other people’s money — teachers’ retirement, university endowments. When funds chase AI deals at 100x revenue, they’re betting those assets. The general partners already collected their 2% management fee. The LPs discover the damage years later.

“Wrapper” startups. Thousands of companies whose product is basically an API call plus a nice interface. They die the moment Apple or Microsoft builds the feature natively.
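The management-fee point about pension funds and endowments is easy to quantify. A sketch assuming a hypothetical $500M fund charging a flat 2% a year on committed capital over a 10-year life (real funds often step fees down after the investment period):

```python
def total_management_fees(fund_size, annual_rate=0.02, fee_years=10):
    """Fees charged on committed capital, regardless of investment performance.

    Simplification: assumes a flat rate for the whole fund life, with no
    step-down and no switch to charging on invested capital.
    """
    return fund_size * annual_rate * fee_years


fund = 500e6  # hypothetical fund size
fees = total_management_fees(fund)
print(f"Fees over the fund's life: ${fees / 1e6:.0f}M ({fees / fund:.0%} of commitments)")
```

Under those assumptions, the general partners collect $100M, 20% of commitments, whether or not any deal returns capital.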

The VC Defense Is Already Written

Here’s how the post-mortem will read:

“Venture capital is a business of outliers. We had a fiduciary duty to participate in the AI opportunity. Yes, many investments didn’t work out, but that’s inherent to the asset class. The ones that succeeded will return the fund.”

They’ll pivot to quantum computing or synthetic biology or whatever the next cycle brings. The machine keeps running. The losses are always “normal” in hindsight.

I’m not saying VCs are bad people. I’m saying their incentive structure is designed to survive bubbles, not prevent them. That’s not a criticism. It’s just a fact founders need to understand.

Once You See the Pattern, You Can Finally Plan Again.

Okay, enough doom. Here’s where the story turns.

AI Is Not “The New Internet.” It’s Infrastructure.

The internet parallel is dangerous because it implies “winner take all” dynamics — that some AI company will become the next Google, and everyone else will fade away.

I don’t think that’s what’s happening.

The better parallel is the first machine learning revolution in the 2000s and 2010s. ML didn’t produce one dominant company. It became invisible infrastructure embedded in every application. Recommendation engines, fraud detection, search ranking, spam filters — ML is everywhere now, and mostly you don’t notice it.

The winners of that era weren’t the companies with the biggest models. They were the ones with the best data, the best evaluation frameworks, the best integration into actual workflows.

The same pattern is emerging now.

Where Moats Actually Come From

If models are commoditizing — and the evidence says they are — then where does defensibility come from?

Data and data rights. Unique datasets that can’t be easily replicated.

Evaluation capability. The ability to distinguish quality from hallucination, tied to business outcomes.

Workflow integration. Embedded in how people actually work. High switching costs.

Distribution. Channels and brand that don’t depend on a single platform.

Cost engineering. Controlling latency, COGS, reliability at scale.

What’s not a moat:

“We also have an LLM chat interface.”

If You Can’t Answer This Question, You Won’t Survive the Crash.

I’ll write a separate piece on specific strategic bets. For now, here’s what matters most.

The One Question

What happens to your company when you can’t make unit economics work — and venture capital goes back to demanding profitability?

If you don’t have an answer, you’re gambling with years of your life.

The Opportunity Is Clarity

I spent two years feeling destabilized by AI uncertainty. Every few months a new model dropped and I’d wonder if our whole roadmap was obsolete.

What changed wasn’t the technology. What changed was recognizing the pattern.

My message is simple: you can finally forecast again.

The hype will fade. AI will remain — as infrastructure, like electricity, like the internet post-2000. The winners won’t be whoever raised at the highest valuation or chased the biggest model. That path doesn’t make anyone happy. Building something meaningful and lasting does.

Happy New Year. Build your own clarity — and invest in that, not the crowd. At worst, you’ll learn something real. At best, you’ll build something that matters.